Cloud quotas: How to make cloud management easy

In the past, a cloud architect's pain point was usually deciding between these two options:

To tackle this confusion, major cloud service providers (CSPs) introduced quotas, each under its own name. For example, here are the terms used by the three major public CSPs:

Application monitoring for businesses of every size

Can application performance truly influence business outcomes? The numbers say it all. Amazon’s 2024 annual report revealed a staggering $638 billion in revenue, and by its own benchmark, a mere 100-millisecond delay could cost Amazon 1% in sales, equating to a potential $6.38 billion loss. Now imagine the scale of financial impact on organiz...

A complete guide to monitoring cross-platform apps using APM

Cross-platform environments are crucial for businesses aiming to optimize development costs while reaching a broader audience. By leveraging shared codebases and frameworks, organizations can maintain a consistent user experience across platforms without the need for redundant development efforts. They enhance operational efficiency by offeri...

Windows 10 end of life: Strategy for IT teams

If you use Windows 10 after October 14, 2025, your devices will continue to function normally, but Microsoft will no longer provide feature updates or security patches for them, leaving them vulnerable to attacks. In short, after October 14, 2025, Microsoft will not:

Sysadmins and chief technology offi...

Leveraging AI for enhanced network monitoring in finance

Why do you need an application monitoring tool?

An APM tool should work for you, not the other way around. That’s why Site24x7 stands out as one of the top application monitoring tools of 2025, combining ease of use, deep insights, and unmatched value to support modern IT environments.

By integrating Site24x7's APM into your IT strategy, you can ensure a seamless digital experien...

How a cloud-based SaaS platform like Site24x7 makes network monitoring easy

The importance of proactive event handling in modern IT observability

Events are actionable signals derived from observability's core pillars (metrics, traces, and logs), which converge to deliver end-to-end visibility across your tech stack. They can flag operational shifts or anomalies, such as server crashes, traffic surges, or sluggish database queries. Events from metrics highlight latency,...
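To make the idea of deriving events from metrics more concrete, here is a minimal Python sketch, assuming a simple static threshold on a single metric series. The `Event` class, the `derive_threshold_events` function, the metric name, and the sample values are hypothetical illustrations, not Site24x7's implementation. A production platform would likely combine thresholds with anomaly detection and with signals from traces and logs.

```python
from dataclasses import dataclass
from typing import Iterable, List, Tuple


@dataclass
class Event:
    """A hypothetical event record derived from a metric sample."""
    metric: str
    timestamp: int  # seconds since the start of the series (assumed unit)
    value: float
    reason: str


def derive_threshold_events(
    metric: str,
    samples: Iterable[Tuple[int, float]],
    threshold: float,
) -> List[Event]:
    """Emit one event each time the metric crosses above the threshold.

    Only the rising edge produces an event, so a sustained breach is
    reported once instead of once per sample.
    """
    events: List[Event] = []
    breaching = False
    for timestamp, value in samples:
        if value > threshold and not breaching:
            events.append(
                Event(metric, timestamp, value, f"{metric} exceeded {threshold}")
            )
            breaching = True
        elif value <= threshold:
            # Metric recovered; the next crossing will raise a new event.
            breaching = False
    return events


# Usage: hypothetical database query latency samples as (timestamp, ms).
latency_samples = [(0, 120.0), (60, 480.0), (120, 510.0), (180, 150.0), (240, 620.0)]
events = derive_threshold_events("db.query_latency_ms", latency_samples, threshold=400.0)
for event in events:
    print(event.timestamp, event.reason)
# prints:
# 60 db.query_latency_ms exceeded 400.0
# 240 db.query_latency_ms exceeded 400.0
```

Reporting only the rising edge keeps a sustained latency spike from flooding the event stream. A fuller version might also emit a recovery event when the value drops back below the threshold.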

Addressing configuration management in legacy network systems

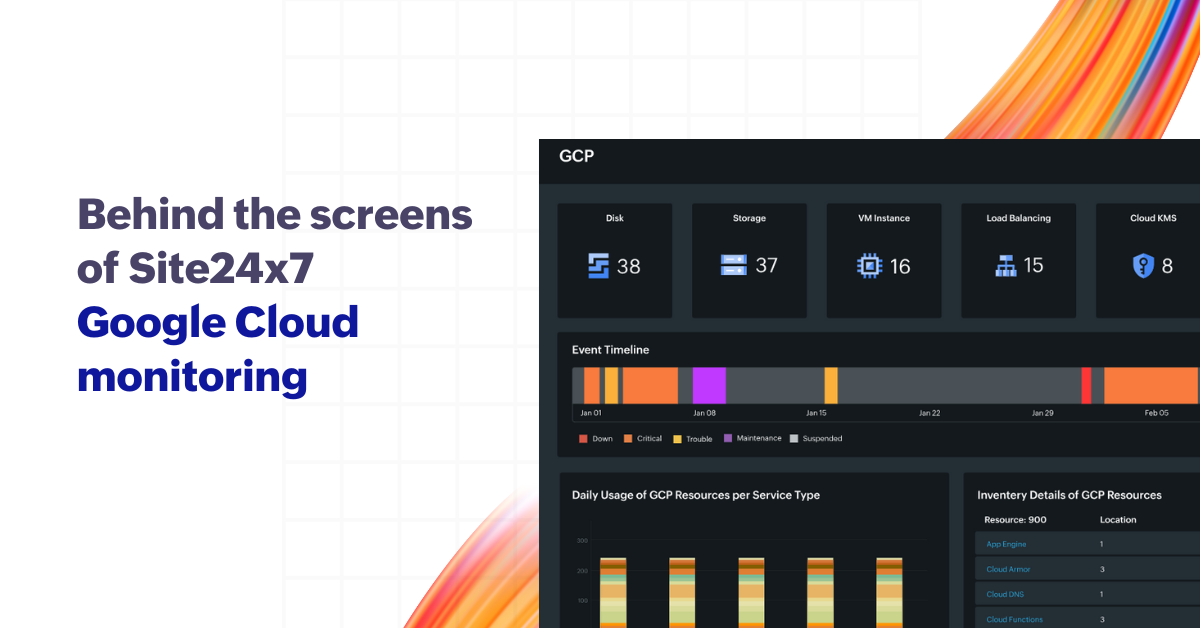

Behind the screens: Site24x7’s Google Cloud Monitoring architecture

Monitoring your vast cloud environments is essential to achieving that level of performance. Site24x7 Google Cloud Monitoring has been an indispensable tool for thousands of IT professionals, including you, to maintain the health and availability of Google Cloud resources.

Have you ever wanted to know how Site24x7 does it without breaking a sw...